Building a Local-First AI Stack on Mac M-Series

There are two kinds of people in tech today:

those who rely entirely on cloud AI… and those who build their own stack, tune their own tools, and sleep better knowing everything runs under their roof.

I’ve lived long enough in this industry to see the pendulum swing back and forth. Centralize everything. Decentralize everything. SaaS for all. Self-host everything.

But one truth never changed:

Control beats convenience — every single time.

And if you’re running a MacBook Air or Pro with an M-Series chip, you’re sitting on one of the best machines ever built for local AI experimentation. Silent, efficient, fast, and ridiculously stable.

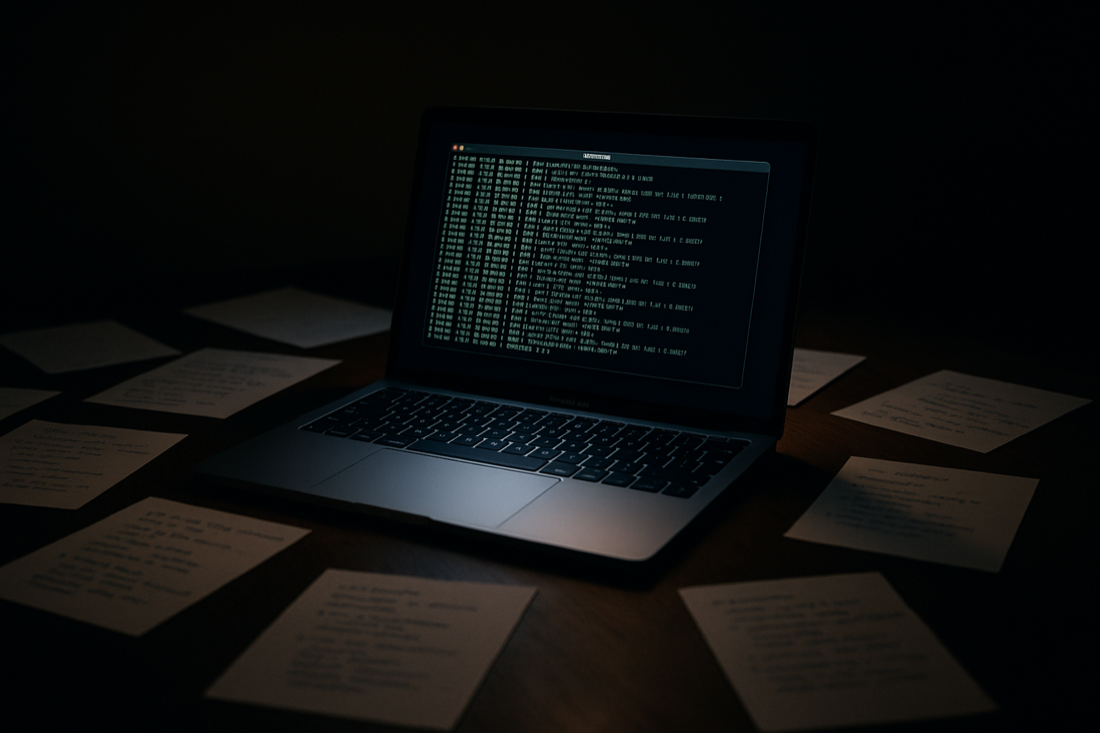

This is what a modern local-first AI environment looks like in 2026, built from the ground up on macOS.

1. Why Local-First Matters Right Now

Cloud models are powerful — no question.

But they come with strings attached:

• Rate limits

• Vendor lock-in

• Data retention policies

• Unpredictable costs

• API outages (the silent killer of any workflow)

• “We value your privacy” banners that mean absolutely nothing

Running AI locally is not just about privacy.

It’s about sovereignty.

It’s about building a workflow that keeps functioning no matter what Big Tech decides tomorrow morning.

And the M-Series architecture changed the game:

because the CPU and GPU share one pool of unified memory, you can now run capable models locally without melting your laptop or borrowing a data center.

2. Core Components of a Local AI Stack (Mac Edition)

A solid local-first environment on macOS typically includes:

• Text + Coding Models

Ollama is the current king for running them locally.

Simple, reliable, and optimized for Apple Silicon.

```bash
brew install ollama

# start the Ollama server (leave this terminal open)
ollama serve
```

Open a new terminal window, then:

```bash
ollama pull llama3:8b
ollama pull qwen2:7b
```

If you write code, analyze logs, build tools, or automate tasks, this becomes your daily bread.
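Once the server is running, any script can talk to it over HTTP. A minimal Python client sketch, assuming Ollama's default address `http://localhost:11434` and its `/api/generate` endpoint; the helper names `build_generate_request` and `generate` are illustrative, not part of any library.

```python
import json
import urllib.request

# Ollama's default local address (assumption: you haven't changed OLLAMA_HOST).
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the model's full text reply."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the whole completion arrives in the "response" field.
    return body["response"]
```

For example, `generate("qwen2:7b", "Summarize this error message in one line")` returns the reply as a plain string. Keeping request construction separate from sending makes the payload easy to inspect or test without a running server.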

• Embeddings + RAG

For building personal knowledge bases, documentation agents, or indexing your company’s internal data.

Recommended stack:

• ChromaDB or Qdrant

• sentence-transformers

• A local LLaMA or Mistral model to generate answers from the retrieved passages
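Under the hood, retrieval is a nearest-neighbour search over embedding vectors; the local model only sees the passages that search returns. A minimal brute-force sketch in plain Python, assuming the vectors already exist. The three-dimensional toy vectors and document ids are hypothetical stand-ins for real sentence-transformers output, which has hundreds of dimensions; ChromaDB or Qdrant do the same job with proper indexes at scale.

```python
import math
from typing import Sequence

# Hypothetical toy vectors; a real pipeline would embed text with sentence-transformers.
DOCS = {
    "vpn-setup": [0.9, 0.1, 0.0],
    "expense-policy": [0.0, 0.2, 0.9],
    "wifi-troubleshooting": [0.7, 0.6, 0.1],
}


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], docs: dict[str, Sequence[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query (brute force)."""
    ranked = sorted(docs, key=lambda doc_id: cosine_similarity(query, docs[doc_id]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Put the retrieved passages in front of the question for the generation model."""
    context = "\n\n".join(passages)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"
```

With a query vector of `[1.0, 0.2, 0.0]`, `top_k` ranks "vpn-setup" first and "wifi-troubleshooting" second; their text would then go through `build_prompt` to the local model.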

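With enough unified memory, your Mac can store and search millions of embeddings without breaking a sweat: one million 384-dimensional float32 vectors take roughly 1.5 GB before index overhead.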
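How many embeddings fit is mostly a memory question. A back-of-the-envelope sketch, assuming dense float32 vectors (4 bytes per value) and ignoring the index and metadata overhead a vector database adds on top. 384 dimensions matches small sentence-transformers models such as all-MiniLM-L6-v2, and 768 matches larger ones such as all-mpnet-base-v2.

```python
def embedding_memory_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw size of a dense embedding matrix; float32 uses 4 bytes per value."""
    return num_vectors * dim * bytes_per_value


def to_gib(num_bytes: int) -> float:
    """Convert bytes to GiB (1024**3 bytes)."""
    return num_bytes / 1024**3


for count, dim in [(1_000_000, 384), (5_000_000, 768)]:
    size = to_gib(embedding_memory_bytes(count, dim))
    print(f"{count:,} x {dim}-dim float32 -> {size:.2f} GiB")
```

This prints about 1.43 GiB for the first case and 14.31 GiB for the second: a million small embeddings are trivial for any M-Series Mac, while five million larger ones will compete with your models for a 16 GB machine's memory.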

• Vision Models (Image Analysis)

Apple’s unified memory architecture lets vision models run surprisingly well, including:

• LLaVA

• SAM (Segment Anything)

• InsightFace

• Florence-2

This is the foundation for projects like FraudTalon, my personal fraud-detection project: image classification, QR code analysis, phishing detection, document inspection.
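Ollama can also serve multimodal models such as LLaVA (`ollama pull llava`). A minimal sketch, assuming Ollama's default address and the `images` field of its `/api/generate` endpoint, which takes a list of base64-encoded images; the function names, file path, and prompt are hypothetical.

```python
import base64
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_vision_request(model: str, prompt: str, image_bytes: bytes) -> urllib.request.Request:
    """Build a non-streaming /api/generate request that attaches one image."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Multimodal models receive images as base64 strings, not file paths.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def describe_image(path: str, prompt: str, model: str = "llava", timeout: float = 300.0) -> str:
    """Send a local image to the model and return its text answer."""
    with open(path, "rb") as f:
        req = build_vision_request(model, prompt, f.read())
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `describe_image("suspicious_email.png", "Does this screenshot look like a phishing attempt? Explain why.")` (a hypothetical file) keeps the image on your machine the whole time.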

• Speech-to-Text (STT)

Whisper is the gold standard.

Optimized builds for M-Series, such as whisper.cpp, run on the GPU through Metal and are lightning fast.

```bash
brew install whisper-cpp
```

Great for:

• dictation

• meetings

• transcribing long tutorials

• building training datasets for bots
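With whisper.cpp (Homebrew formula `whisper-cpp`), a typical flow is to convert the recording to the 16 kHz mono 16-bit WAV it expects, then run the transcriber. A minimal sketch that shells out to both tools, assuming `ffmpeg` is installed and a ggml model file has been downloaded. The binary name `whisper-cli` (older releases named it differently), the model path, and the helper names are assumptions to adjust for your install.

```python
import subprocess
from pathlib import Path

# Assumptions: adjust to your install; older whisper.cpp builds used another binary name.
WHISPER_BIN = "whisper-cli"
MODEL_PATH = Path("models/ggml-base.en.bin")  # hypothetical location of a downloaded model


def ffmpeg_to_wav_cmd(src: Path, dst: Path) -> list[str]:
    """Resample any audio/video file to 16 kHz, mono, 16-bit PCM WAV (-y overwrites dst)."""
    return ["ffmpeg", "-y", "-i", str(src),
            "-ar", "16000", "-ac", "1", "-c:a", "pcm_s16le", str(dst)]


def whisper_cmd(wav: Path, model: Path = MODEL_PATH) -> list[str]:
    """Run whisper.cpp on a WAV file; -otxt also writes the transcript to a .txt file."""
    return [WHISPER_BIN, "-m", str(model), "-f", str(wav), "-otxt"]


def transcribe(src: Path) -> None:
    """Convert, then transcribe; raises CalledProcessError if either tool fails."""
    # Write to a separate file so a WAV input is never overwritten in place.
    wav = src.with_name(src.stem + "-16k.wav")
    subprocess.run(ffmpeg_to_wav_cmd(src, wav), check=True)
    subprocess.run(whisper_cmd(wav), check=True)
```

Building the argument lists in separate functions keeps the exact commands visible and easy to tweak (for example, swapping in a larger model) without touching the orchestration.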

• Text-to-Speech (TTS)

You can use:

• Piper (very lightweight)

• XTTS-v2 (heavier, but more natural)

• macOS built-in voices (underrated)

Great for creating content, accessibility tools, or batch audio generation.
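For batch audio with the built-in voices, the macOS `say` command can write to a file instead of the speakers: `-v` picks the voice, `-o` the output file, and `say -v '?'` lists the voices installed. A minimal sketch, assuming the Samantha voice is available and AIFF output is acceptable; the file naming scheme and helper names are hypothetical.

```python
import subprocess
from pathlib import Path


def say_cmd(text: str, out_file: Path, voice: str = "Samantha") -> list[str]:
    """Build a macOS `say` command that renders text to an audio file."""
    return ["say", "-v", voice, "-o", str(out_file), text]


def batch_tts(lines: list[str], out_dir: Path, voice: str = "Samantha") -> list[Path]:
    """Render each line to its own numbered AIFF file and return the paths."""
    out_dir.mkdir(parents=True, exist_ok=True)
    outputs = []
    for i, text in enumerate(lines, start=1):
        out = out_dir / f"clip_{i:03d}.aiff"
        subprocess.run(say_cmd(text, out, voice), check=True)
        outputs.append(out)
    return outputs
```

Calling `batch_tts(["Welcome to the course.", "Lesson one: setup."], Path("audio"))` would produce `audio/clip_001.aiff` and `audio/clip_002.aiff`, fully offline.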

3. Docker: The Real Backbone

Even with everything local, containers are non-negotiable.

With Docker Desktop or OrbStack:

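• easy model isolation (though containers on macOS can't use the Apple GPU, so GPU-accelerated runtimes like Ollama are best run natively)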

• repeatable environments

• perfect reproducibility for your projects

• frictionless deployments to any VPS

• quick rollback if something breaks

This is how you build a serious personal lab.

4. How This Stack Fits Into Real Work

This isn’t theoretical.

This is what I actually use:

• Prototype AI agents

• Test fraud-detection pipelines (like my personal project, FraudTalon)

• Train small NLP models with spaCy

• Run log-analysis workflows

• Build Chromium-free security tools

• Draft technical content offline

• Experiment with embeddings and RAG

• Validate ideas before shipping them to production

Suddenly your Mac stops being “just a laptop”

and becomes a compact, silent, personal datacenter.

5. The Future Is Sovereign

Cloud AI will always have its place — especially for enterprise-scale workloads.

But individuals and small teams don’t need “infinite compute.”

They need:

• autonomy

• portability

• predictable costs

• privacy

• resilience

• flexibility

And a local-first stack gives you all of that, right now.

The M-Series made this future accessible.

The tools are ready.

The models are ready.

What’s missing is the mindset.

If you own your tools, you own your future.
